OpenAI has launched real-time vision and screen-sharing capabilities in ChatGPT, adding video functionality to its Advanced Voice Mode. The update lets ChatGPT analyze live video, explain what is on a shared screen, and give contextual help with tasks such as math problems and menu navigation.

ChatGPT’s Advanced Voice Mode Now Integrates Real-Time Vision – Key Points

Key Features:

Real-Time Vision Capabilities:

- Users can now use a device’s camera to interact with ChatGPT in real time.

- The feature allows ChatGPT to:

  - Recognize and interpret objects via live video.

  - Assist with activities like solving math problems or explaining complex device settings.

  - Provide step-by-step guidance on everyday tasks such as cooking or creative activities.

- Activation:

  - Tap the voice icon in the ChatGPT app and enable the video feature.

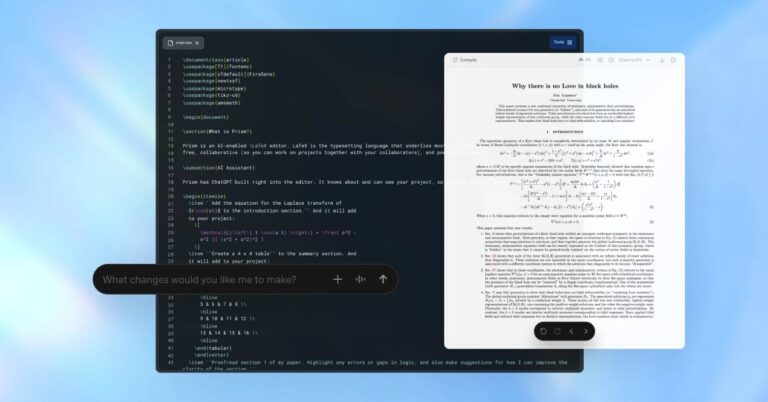

Screen Sharing:

- ChatGPT can explain on-screen menus and interfaces while users share their screens.

- Available via the three-dot menu under the “Share Screen” option.

- Practical for:

  - Troubleshooting technical issues.

  - Collaborating on presentations or projects.

Availability and Rollout Details:

- Exclusively for ChatGPT Plus, Team, and Pro subscribers.

  - Plus Plan: $20/month.

  - Pro Plan: $200/month.

- Rollout began on December 12, 2024, and was expected to complete within a week.

- Scheduled for Enterprise and Education users in January 2025.

- Regional Limitations:

  - Not available in the EU, Switzerland, Iceland, Norway, or Liechtenstein due to regulatory concerns.

Demonstration Highlights:

- Featured on CBS’s 60 Minutes:

  - ChatGPT analyzed Anderson Cooper’s drawings in real time, identifying anatomical elements.

  - The same demo revealed difficulties with complex geometry problem-solving.

Competitive Landscape:

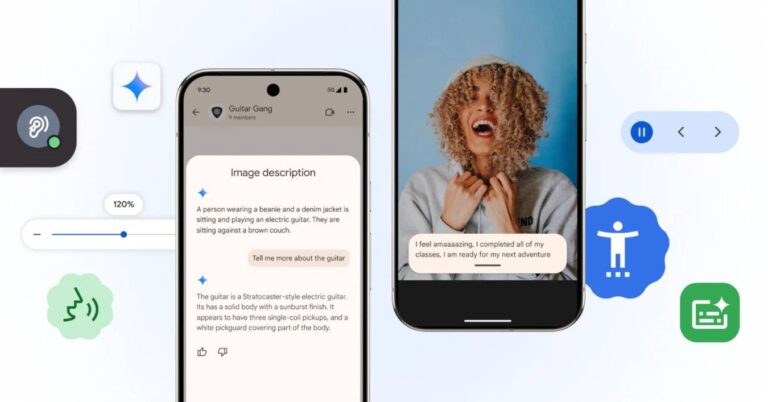

- Google:

  - Unveiled Gemini 2.0 with comparable features, including multilingual support, real-time tasks, and agentic behavior.

  - Currently available to “trusted testers” on Android; broader rollout expected early 2025.

- Meta:

  - Showcased Meta AI alongside AR-integrated Project Orion smart glasses for real-time interaction via built-in cameras.

Notable Insights:

- The feature arrives as part of OpenAI’s “12 Days of OpenAI” campaign, which also includes:

  - Launch of the o1 model.

  - Release of ChatGPT Pro and generative video app Sora.

  - Advanced customization via reinforcement fine-tuning.

- The release faced delays due to prior controversies, such as voice-mode impersonation issues involving Scarlett Johansson.

Why This Matters:

The update moves ChatGPT closer to seamless multimodal interaction, with immediate real-world applications in productivity and education. However, competition from Google’s Gemini 2.0 and Meta AI underscores how quickly the race for dominance in AI is evolving.
