To mark Global Accessibility Awareness Day 2025, Google has rolled out a set of accessibility updates across Android and Chrome, many of them powered by its Gemini models. The updates change how people with vision, hearing, and speech impairments interact with content. They add conversational screen reading, captions that convey emotion and tone, and better support for accessible testing on Chromebooks.

Google Enhances AI Accessibility Across Android and Chrome – Key Points

Gemini Integration with TalkBack (Vision Accessibility):

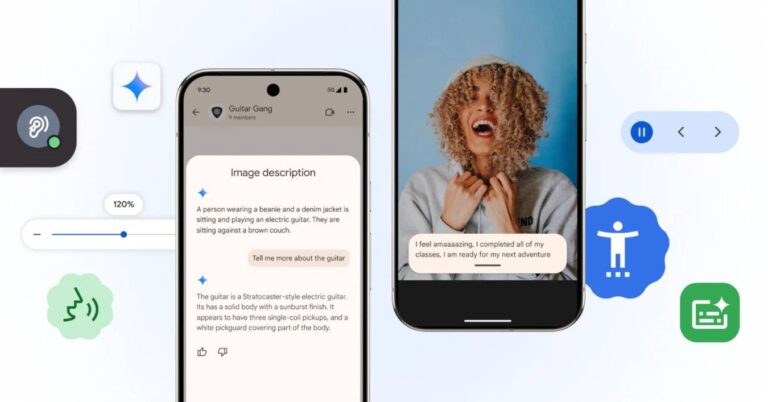

Android's TalkBack screen reader now uses Gemini to offer richer interaction:

- Users can ask follow-up questions about images, such as "What color is this car?" or "What brand is this guitar?"

- Users can also ask questions about everything on the screen, for example whether an item is discounted or what the news headlines say.

- Descriptions are delivered via speech, vibration, or sound cues for users with visual impairments.

Expressive Captions (Hearing Accessibility):

Expressive Captions now convey more of how something is said, not just what is said:

- Captions reflect emotion, tone, and how long a word is drawn out, e.g., "GOAAAAAL!" or "Oh nooooooo!"

- Tags like [whistle], [applause], and [clearing throat] improve context and clarity.

- Available in English on Android 15+ in the U.S., U.K., Canada, and Australia.

Project Euphonia Expansion (Speech Accessibility):

To make speech recognition work for a wider range of speech patterns, Google is expanding Project Euphonia by:

- Publishing open-source resources on GitHub for developers building custom audio tools.

- Partnering with University College London to create datasets in 10 African languages.

- Empowering the development of inclusive speech recognition tools worldwide.

Chromebook Accessibility for Students (Education & Mobility):

On Chromebooks, Google is expanding accessibility features for students:

- Face Control lets users operate the Chromebook with facial gestures and head movements.

- Reading Mode lets users customize how text is displayed.

- The College Board's Bluebook testing app now works with Chromebook accessibility tools such as ChromeVox and Dictation, so students can use them during exams.

Chrome Browser Enhancements (Web Accessibility):

Chrome now includes updates that make hard-to-access content easier to use:

- Optical character recognition (OCR) lets screen readers read scanned PDFs that were previously inaccessible. Users can now highlight, copy, and search text in these PDFs, and have it read aloud.

- Page Zoom on Android enlarges text without breaking the page layout. The zoom level can be set for individual pages or for all pages from the Chrome menu.

Why This Matters:

By building AI into core accessibility features, Google is going beyond basic compliance. These tools give people with disabilities more control, context, and confidence when they navigate and consume content, and they show how AI can help close digital divides.
