Harvard University, in partnership with Google, launched the 1 Million Books Initiative on December 12, 2024. Spearheaded by the Institutional Data Initiative (IDI) and funded by OpenAI and Microsoft, this groundbreaking project aims to democratize access to high-quality training data for large language models (LLMs). The dataset, derived from Google’s extensive digitization efforts, encompasses a diverse collection of public domain works, offering a legally sound and ethical resource for AI training.

Harvard’s 1 Million Books Initiative – Key Points

Key Features of the Dataset

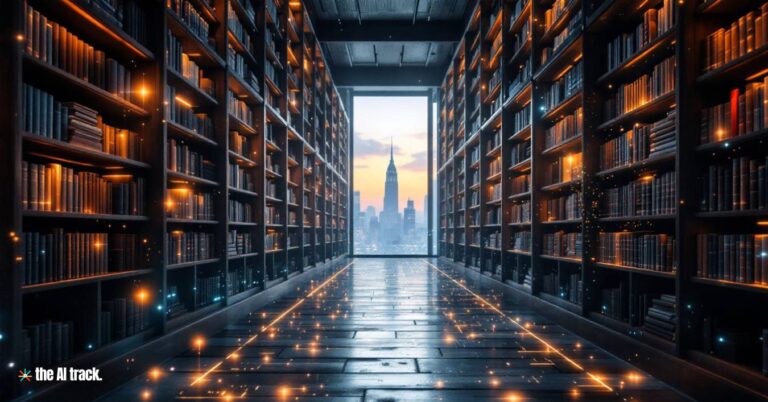

Content Diversity:

The dataset includes seminal works by authors such as Shakespeare, Dickens, and Dante, alongside niche materials such as mathematics textbooks, technical manuals, and dictionaries in lesser-used languages.

Volume and Scope:

The dataset contains approximately one million public domain texts spanning centuries, genres, and languages, which makes it applicable to natural language processing (NLP), translation models, and educational AI tools.

Goals and Vision

Democratization of AI Training:

IDI aims to lower the barriers for AI researchers and startups, traditionally unable to compete with large corporations due to the high costs of acquiring vast training datasets. The initiative seeks to “level the playing field,” according to Greg Leppert, IDI’s executive director.

Setting Ethical Standards:

By using only public domain materials, the initiative avoids the legal and ethical controversies facing companies like OpenAI and Meta, which have been sued over the alleged unauthorized use of copyrighted content.

Legal and Ethical Context

Public Domain Exclusivity:

The project draws only on public domain content, which removes the risk of copyright infringement claims and keeps it clear of ongoing disputes over copyrighted material in AI training. This matters as companies like OpenAI and Meta face lawsuits from authors and publishers over the alleged misuse of copyrighted data.

Global Influence:

IDI’s approach mirrors international efforts, such as Iceland’s initiative to preserve its language and culture in AI models by digitizing its national library collections.

Challenges and Limitations

Historical Focus:

The dataset primarily comprises historical texts, limiting its relevance for models that require contemporary data, colloquialisms, or domain-specific terminology.

Scale vs. Industry Needs:

While significant, the dataset represents only a fraction of the trillions of tokens used to train state-of-the-art LLMs. Augmentation with modern datasets remains necessary.

Broader Industry Impact

Addressing Data Scarcity:

With platforms like Reddit and X (formerly Twitter) restricting access to valuable data, this initiative provides a cost-free, high-quality alternative for academic and smaller-scale developers.

Boosting AI Innovation:

By reducing dependency on expensive proprietary datasets, Harvard’s initiative fosters innovation and levels the competitive landscape, especially for startups and academic researchers.

Future Implications

A Blueprint for Others:

IDI’s ethical and legal framework could inspire other institutions to contribute to open-access AI training resources.

Advancing Inclusive AI:

By incorporating underrepresented languages and texts, the initiative helps address biases in AI models, making them more inclusive and more reflective of global diversity.

Harvard’s 1 Million Books Initiative represents a landmark step toward making AI development more transparent, ethical, and accessible. While it cannot by itself satisfy the massive data requirements of modern LLMs, its emphasis on democratization and legal clarity sets a precedent for responsible AI innovation.
