Meta has launched a standalone AI app that integrates Llama 4 and voice-first interaction to offer a personalized assistant experience across platforms and devices, including glasses and desktop. With an industry-first social Discover feed, full-duplex voice technology, and ambitious personalization, Meta aims to raise the bar for AI personal assistants.

Meta Launches Standalone AI App – Key Points

Standalone Meta AI App Launch (April 2025):

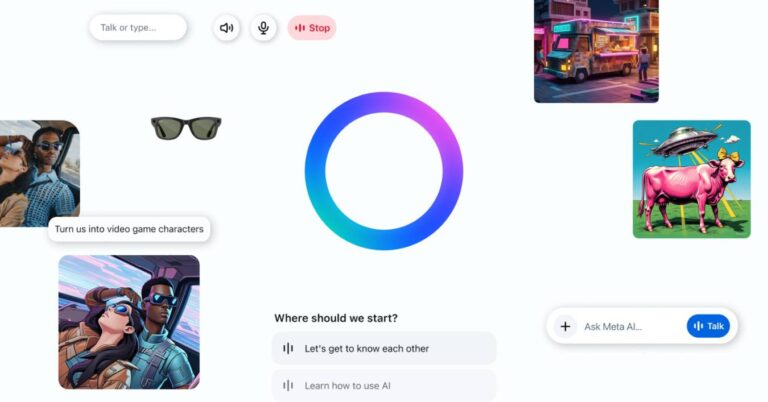

Meta has launched a standalone AI app built on its Llama 4 model and designed for more natural voice conversations and deeper personalization. It integrates across Meta's platforms (Instagram, WhatsApp, Messenger, Facebook) and replaces the Meta View app for Ray-Ban glasses. The app was introduced at Meta’s inaugural AI developer event, LlamaCon, in Menlo Park.

Voice-First AI Assistant with Duplex Speech:

A full-duplex voice demo allows real-time voice interaction, simulating human dialogue with overlapping speech and dynamic turn-taking. This beta voice mode stems from Meta’s research on synchronous LLMs. Voice conversations, including the demo, are available in the US, Canada, Australia, and New Zealand.

Personalization Through Meta Ecosystem:

The app uses data from Facebook and Instagram via the Accounts Center to adapt responses based on user behavior. It also remembers preferences such as dietary needs or travel interests. These personalization features are currently active in the US and Canada.

Discover Feed and Prompt Sharing:

The app includes a built-in Discover feed that turns AI use into a social experience. Users can share prompts, remix content, and engage with community ideas, including trends like emoji-based self-descriptions and Ghibli-style transformations. Sharing remains opt-in.

Integration with Ray-Ban Meta Glasses:

The app merges with Meta View, allowing seamless transitions between smart glasses and app use. Object recognition, live translation, and upcoming HUD-enabled models are part of the ecosystem. Conversations initiated via glasses continue in-app or on desktop.

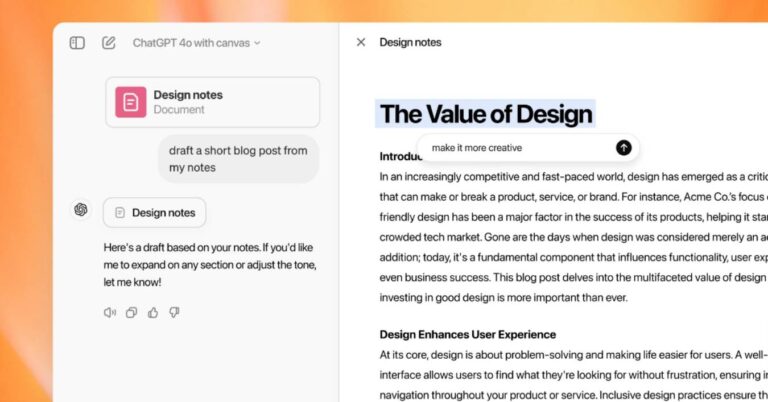

Web Experience Enhancements:

Meta AI’s desktop interface now includes upgraded voice features, image generation, and document capabilities. Users can create, edit, and export documents, while testing is underway for import functionality.

Privacy, Monetization, and Controls:

Users can manage microphone and sharing preferences, and the app maintains Meta's platform-wide privacy controls. A paid subscription model for premium features will be tested in Q2 2025 but is not expected to deliver revenue until 2026.

Current Reach, Competitive Landscape, and Vision:

With nearly 1 billion users already using Meta AI across search bars and apps, the standalone app is a direct response to offerings like ChatGPT, Gemini, Grok, and Claude. Meta plans to lead through personalization, social data, and massive infrastructure investments ($65B projected in 2025).

Why This Matters:

Meta launched the standalone app to establish dominance in the rapidly evolving AI assistant space. With integrated social features, contextual memory, and platform-wide voice access, Meta is shaping a unique AI ecosystem across devices. The app’s design, reach, and personalization capabilities distinguish it from rivals and align with Meta’s broader strategy to redefine digital interaction.

