OpenAI has announced several new models and developer products at DevDay, including GPT-4 Turbo with 128K context, a new Assistants API, GPT-4 Turbo with Vision, DALL·E 3 API, and more.

GPT-4 Turbo Introduction – Key Points

Notable announcements include:

- New Additions and Improvements: OpenAI announced a set of additions and improvements to its developer platform, aimed at making its models more capable and more accessible to developers. The main items follow.

- GPT-4 Turbo: OpenAI has introduced GPT-4 Turbo, the next generation of GPT-4. It is more capable than its predecessor and has knowledge of world events up to April 2023. Its 128K context window can hold the equivalent of more than 300 pages of text in a single prompt. Pricing is also lower: compared with GPT-4, input tokens are 3x cheaper and output tokens are 2x cheaper.

- Function Calling Updates: Function calling lets developers describe their application's functions to the model, which can then respond with a JSON object containing the arguments for calling them. The model can now request multiple function calls in a single message, which removes extra round trips, and it is more likely to return the correct function parameters.

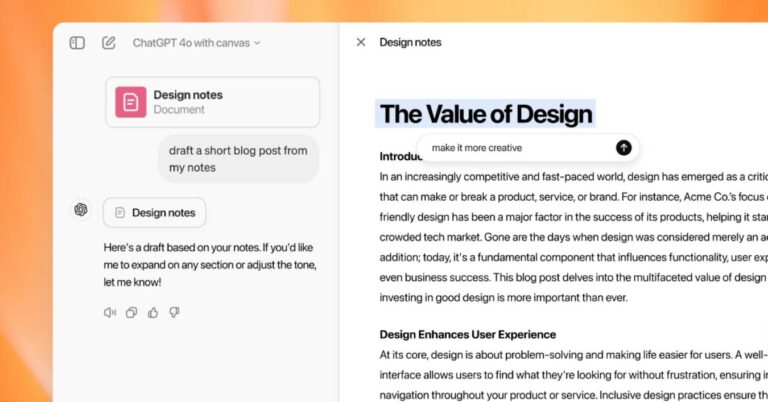
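
The parallel function calling described above can be sketched without touching the network. The snippet assumes the Chat Completions response shape in which an assistant message carries a `tool_calls` list, each entry having an `id` and a `function` with a `name` and JSON-encoded `arguments`. The sample message and the `get_weather` and `get_time` helpers are hypothetical stand-ins for illustration, not part of the announcement.

```python
import json

# Hypothetical local functions the model is allowed to call.
def get_weather(city: str) -> dict:
    return {"city": city, "forecast": "sunny"}

def get_time(city: str) -> dict:
    return {"city": city, "time": "12:00"}

AVAILABLE_FUNCTIONS = {"get_weather": get_weather, "get_time": get_time}

# Hand-written example of an assistant message requesting two calls at once
# (assumed shape; a real message would come from the API response).
sample_assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "get_time", "arguments": '{"city": "Tokyo"}'}},
    ],
}

def run_tool_calls(message: dict) -> list[dict]:
    """Execute every requested call and build one "tool" message per call."""
    results = []
    for call in message.get("tool_calls") or []:
        name = call["function"]["name"]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        func = AVAILABLE_FUNCTIONS.get(name)
        if func is None:
            output = {"error": f"unknown function: {name}"}
        else:
            output = func(**args)
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],  # ties the result back to the request
            "name": name,
            "content": json.dumps(output),
        })
    return results

tool_messages = run_tool_calls(sample_assistant_message)
```

In a real exchange, the assistant message and the resulting tool messages would be appended to the conversation and sent back so the model can compose its final answer.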

- Improved Instruction Following and JSON Mode: GPT-4 Turbo performs better than earlier models on tasks that require following instructions carefully, such as generating a specific output format. It also supports a new JSON mode: setting the `response_format` parameter in the Chat Completions API constrains the model to output a syntactically valid JSON object.

- Reproducible Outputs and Log Probabilities: A new `seed` parameter makes the model return consistent completions most of the time, which helps with debugging and with keeping tighter control over model behavior. OpenAI also plans to return log probabilities for the most likely output tokens, which is useful for features such as autocomplete in a search experience.

- Updated GPT-3.5 Turbo: Alongside GPT-4 Turbo, OpenAI has released an updated GPT-3.5 Turbo. This version supports a 16K context window by default and, according to OpenAI's internal evaluations, shows a 38% improvement on format-following tasks such as generating JSON, XML, and YAML. Developers can access it through the API as `gpt-3.5-turbo-1106`.

- Assistants API: OpenAI has introduced the Assistants API, allowing developers to create agent-like experiences within their applications. This API offers various capabilities, including Code Interpreter, Retrieval, and function calling, reducing the complexity of building high-quality AI applications.

- New Modalities: OpenAI’s platform now supports new modalities, including GPT-4 Turbo with Vision, which can process images in the Chat Completions API. DALL·E 3, a tool for image creation, is available through the Images API, and developers can generate human-quality speech from text using the text-to-speech (TTS) API.

- Lower Prices and Higher Rate Limits: OpenAI has lowered prices for several models, making them more cost-effective for developers. The platform also offers higher rate limits, enabling scalability for applications.

- Copyright Shield: OpenAI has introduced Copyright Shield, pledging to defend customers and pay the costs incurred if they face copyright infringement claims. The protection applies to generally available features of ChatGPT Enterprise and the developer platform.

- Whisper v3 and Consistency Decoder: OpenAI has released Whisper large-v3, the next version of its open-source automatic speech recognition model. It has also open-sourced the Consistency Decoder, a drop-in replacement for the Stable Diffusion VAE decoder that improves image quality, particularly for text, faces, and straight lines.
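
For the JSON mode and seed items above, a minimal sketch of a Chat Completions request body may help. It assumes the `response_format` and `seed` parameters and the `gpt-4-1106-preview` model name as given in OpenAI's API documentation; `build_request` is a hypothetical helper, and the payload is only constructed here, not sent.

```python
import json

def build_request(user_prompt: str, seed: int = 42) -> dict:
    """Build a Chat Completions request body using JSON mode and a fixed seed."""
    return {
        "model": "gpt-4-1106-preview",  # assumed preview model name for GPT-4 Turbo
        "seed": seed,                   # same seed + same inputs -> mostly consistent output
        "response_format": {"type": "json_object"},  # turns on JSON mode
        "messages": [
            # JSON mode expects the prompt itself to ask for JSON.
            {"role": "system", "content": "Reply with a JSON object."},
            {"role": "user", "content": user_prompt},
        ],
    }

# Two requests built from the same prompt and seed are identical,
# which is the precondition for comparing outputs while debugging.
request_a = build_request("List three primary colors.")
request_b = build_request("List three primary colors.")
body = json.dumps(request_a, sort_keys=True)
```

With the official Python SDK, these fields would likely be passed as keyword arguments to the chat completions call. Reproducibility is best-effort rather than guaranteed, so identical requests may still occasionally produce different completions.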