OpenAI has adopted a Preparedness Framework in response to the growing risks posed by advanced AI models. The Framework is meant to track, evaluate, and mitigate potential catastrophic risks so that AI technologies are developed and deployed more safely.

OpenAI’s Preparedness Framework – Key Points

- Expansion of Safety Processes: OpenAI has broadened its safety protocols to prevent harmful AI developments, including establishing a safety advisory group and granting veto power to the board.

- Updated Preparedness Framework: The Framework is designed to systematically address catastrophic risks, employing rigorous evaluations and forecasts.

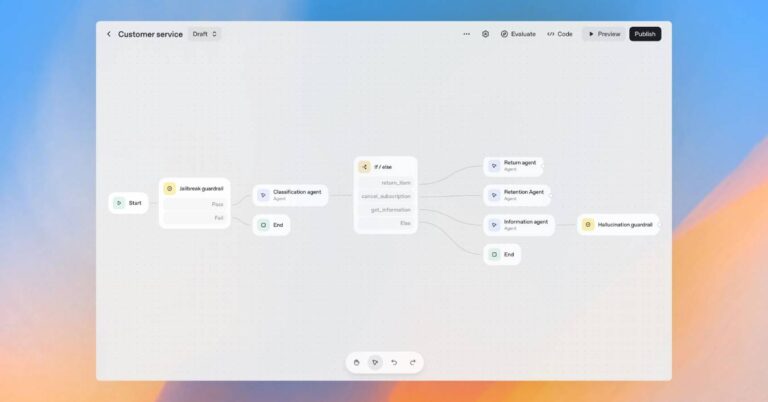

- Diverse Safety Teams: OpenAI runs several teams that each cover a different aspect of AI safety, such as the Safety Systems team for current models, the Preparedness team for frontier models, and the Superalignment team for future superintelligent models.

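- Risk Evaluation and Mitigation: The Framework calls for evaluating all frontier models, including whenever the compute used in a training run increases significantly (the Framework specifies every 2x increase in effective compute), so that risks can be assessed and mitigated before they materialize.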

- Decision-Making Structure: A dedicated team oversees technical work, and a safety advisory group reviews its findings and makes recommendations. Leadership makes the safety decisions, and the board has the power to reverse them.

- Public and Political Concerns: Since ChatGPT’s launch, AI’s potential to spread disinformation and manipulate humans has been a concern for the public, politicians, and researchers.

- Pause in Development Requested: In an open letter, AI industry leaders and experts called for a six-month pause in developing systems more powerful than GPT-4, citing risks to society.

- Scorecards and Risk Thresholds: OpenAI will continually update model scorecards and has set risk thresholds that trigger safety measures. Only models with a post-mitigation score of "medium" or below can be deployed, and only models with a post-mitigation score of "high" or below can be developed further.

- Global AI Regulation: The EU’s initiation of AI regulation reflects a growing global trend towards governing AI technology, with OpenAI aligning its practices accordingly.

- Internal Turmoil and Governance Challenges: The company went through turmoil in November 2023 when the board removed Sam Altman as CEO and then reinstated him days later after a backlash from staff and investors, underscoring the challenges of AI governance.

- External Collaboration and Accountability: OpenAI plans to collaborate with external parties for risk tracking and will implement independent audits to maintain accountability.
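
The gating rule under "Scorecards and Risk Thresholds" can be illustrated with a small sketch. It is not OpenAI's implementation. It assumes the four risk levels (low, medium, high, critical) and four tracked categories described in the published Framework, that a model's overall score is its highest category score, and that deployment requires a post-mitigation score of medium or below while further development requires high or below. The names `Risk`, `overall_score`, `may_deploy`, `may_develop_further`, and the example scorecard values are all hypothetical.

```python
from enum import IntEnum


class Risk(IntEnum):
    """Risk levels, ordered so that comparisons reflect severity."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2
    CRITICAL = 3


def overall_score(scorecard: dict) -> Risk:
    """Assumption: the overall score is the highest score in any category."""
    return max(scorecard.values())


def may_deploy(post_mitigation: dict) -> bool:
    """Assumed deployment threshold: post-mitigation score of MEDIUM or below."""
    return overall_score(post_mitigation) <= Risk.MEDIUM


def may_develop_further(post_mitigation: dict) -> bool:
    """Assumed development threshold: post-mitigation score of HIGH or below."""
    return overall_score(post_mitigation) <= Risk.HIGH


# Hypothetical post-mitigation scorecard; the values are made up for illustration.
example_scorecard = {
    "cybersecurity": Risk.LOW,
    "cbrn": Risk.MEDIUM,
    "persuasion": Risk.MEDIUM,
    "model_autonomy": Risk.HIGH,
}

print("overall:", overall_score(example_scorecard).name)
print("may deploy:", may_deploy(example_scorecard))
print("may develop further:", may_develop_further(example_scorecard))
```

In this made-up case a single "high" category blocks deployment but still allows further development, which is why the sketch takes the maximum across categories rather than an average.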

Sources

- “OpenAI buffs safety team and gives board veto power on risky AI”, TechCrunch

- “OpenAI outlines AI safety plan, allowing board to reverse decisions”, Reuters

- “Preparedness”, OpenAI

- “ChatGPT maker OpenAI gives its board the power to veto harmful AI as EU begins regulation process”, Euronews