GPT-4o: Why It’s the Best AI Model You Can Use Today

OpenAI has rolled out its most advanced model yet, GPT-4o, which is available to both free and premium users and improves response speed and accuracy. The “o” in GPT-4o stands for “omni,” reflecting the way it merges voice, text, and visual data into a single, cohesive model. Free users will face some usage limits, while premium users will enjoy extended features. OpenAI’s CEO, Sam Altman, described the new model, saying, “It feels like AI from the movies… Talking to a computer has never felt really natural for me; now it does,” adding, “We are a business and will find plenty of things to charge for.”

Key Points

- Introduction of GPT-4o: OpenAI launched its latest AI model, GPT-4o, which will be available to free users and brings gains in speed and accuracy. The “o” stands for “omni,” reflecting the integration of voice, text, and vision capabilities into a single model.

- New Features: Updates include improved international language support, the ability to analyze images, audio, and text documents, and advanced voice capabilities. GPT-4o can handle real-time text, audio, and video inputs, responding to audio inputs in as little as 232 milliseconds, similar to human conversation speed. The new audio capabilities let users speak to ChatGPT and get responses with no noticeable delay, and interrupt ChatGPT while it is speaking. Both are hallmarks of realistic conversation that AI voice assistants have found challenging.

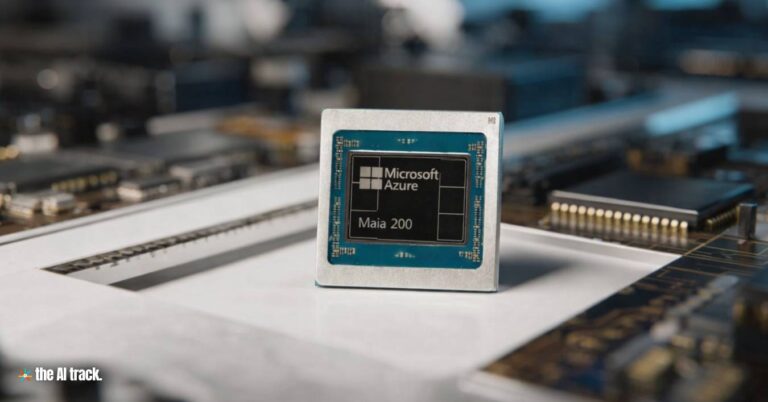

- Model Capabilities: GPT-4o matches GPT-4 Turbo in text and coding performance, with significant improvements in non-English languages, vision, and audio understanding. Through the API, it is half the price and twice as fast as GPT-4 Turbo, making it a more efficient and cost-effective option for developers. GPT-4o’s training data runs through October 2023, so it has no built-in knowledge of later events. During the demo, the model interpreted an OpenAI employee’s emotion based on a selfie, demonstrating its visual processing capabilities.

- Demonstrations: Live demos showcased the AI’s ability to create emotive voice responses, solve math problems via camera input, analyze facial expressions, and translate between languages in real time. During the livestream, OpenAI demonstrated the new voice features by asking the model to tell a bedtime story in different styles and expressions, including a robotic voice and a sing-song one. The model was also able to describe what it saw on a phone camera and continue speaking after being interrupted without needing further prompting. Some glitches occurred, including audio cutouts and unexpected responses.

- Voice and Interaction: GPT-4o offers real-time translations and the ability to interrupt the bot with new queries for dynamic interactions. It can respond to verbal questions with audio replies almost instantly.

- Access and Availability: The new features will be gradually rolled out to free users, with certain capabilities already available to paying ChatGPT Plus and Team users. The GPT Store will be accessible to all users, offering custom chatbots previously limited to paid customers. Free ChatGPT users also now have access to a “browse” feature that lets ChatGPT display up-to-date information from the web. The text and image capabilities of GPT-4o are available immediately, while the voice feature will be rolled out iteratively in a limited alpha release, starting with a small group of trusted partners to prevent misuse. A desktop app is also on the way, expanding the ways users can interact with the model.

- Safety Measures: OpenAI is rolling out features gradually to prevent misuse, particularly in voice and facial recognition. Safety measures, including filtering training data and refining model behavior, are in place to encourage responsible use. OpenAI’s chief technology officer, Mira Murati, said the new model would be offered for free because it is more cost-effective than the company’s previous models. OpenAI acknowledged that the audio component poses new safety challenges and said it has built guardrails against misuse, though it described them only in general terms. Separately, the EU AI Act prohibits AI systems that infer people’s emotions in workplace and education settings, which may restrict how GPT-4o’s emotion-reading capabilities can be used there.

- Competitive Landscape: OpenAI’s announcement coincides with growing competition from other AI firms like Google, Anthropic, and Cohere, which are also releasing advanced models. OpenAI made these announcements a day before Alphabet is scheduled to hold its annual Google I/O developers’ conference, where it is expected to show off its own new AI-related features. This timing underscores the competitive landscape and OpenAI’s intent to maintain its edge in the AI race.

- Speculations and Future Plans: Speculation about OpenAI’s next moves includes the potential development of a search product to rival Google. Reports also suggested the possibility of a voice assistant baked into GPT-4, but the launch ultimately focused on GPT-4o. CEO Sam Altman hinted at more forthcoming innovations but quelled rumors about an imminent launch of GPT-5. Altman also posted on X after the demo, referring to the film “Her,” a nod to how natural and engaging conversation with GPT-4o can feel.

- Partnerships and Legal Issues: OpenAI is negotiating to integrate its AI with Apple’s iPhone OS and faces lawsuits over alleged copyright violations. The company continues to expand its product offerings and develop new AI technologies. Shortly after launching in late 2022, ChatGPT was called the fastest application ever to reach 100 million monthly active users. However, worldwide traffic to ChatGPT’s website has been on a roller-coaster ride in the past year and is only now returning to its May 2023 peak.
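
For developers weighing the “half the price and twice as fast” claim under Model Capabilities, the arithmetic can be sketched in a few lines of Python. This is only a rough illustration: the function name and the baseline figures are hypothetical, and the two ratios are taken from the claim above rather than from measured results.

```python
# Ratios taken from the article's claim about GPT-4o versus GPT-4 Turbo.
COST_RATIO = 0.5     # "half the price"
SPEED_FACTOR = 2.0   # "twice as fast"


def estimate_gpt4o(baseline_cost_usd: float, baseline_latency_s: float) -> tuple[float, float]:
    """Scale a GPT-4 Turbo baseline by the claimed ratios.

    Returns (estimated_cost_usd, estimated_latency_s).
    """
    return baseline_cost_usd * COST_RATIO, baseline_latency_s / SPEED_FACTOR


# Hypothetical GPT-4 Turbo workload: $120 per month, 8 seconds per response.
cost, latency = estimate_gpt4o(120.0, 8.0)
print(f"Estimated GPT-4o cost: ${cost:.2f}, latency: {latency:.1f}s")
```

Actual savings depend on the workload, so treat the result as a starting estimate rather than a guarantee.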

Sources

- Hello GPT-4o | OpenAI, 13 May 2024