The release of the DeepSeek V3-0324 model, with improved reasoning and coding capabilities, positions it as a cost-effective alternative to models from Western AI leaders like OpenAI and Anthropic.

DeepSeek V3-0324 AI Model Release – Key Points

The Big Picture

Chinese AI startup DeepSeek has unexpectedly released DeepSeek V3-0324, an upgraded large language model (LLM) that rivals top-tier AI systems like OpenAI’s ChatGPT and Anthropic’s Claude while being freely available for anyone to use, modify, and build upon. What makes this release groundbreaking? It combines unprecedented efficiency, low-cost development, and open accessibility, challenging the dominance of U.S. tech giants and reshaping how AI evolves globally.

The significance of DeepSeek’s impact is now evident in the wider geopolitical response. The original V3 model, on which both V3-0324 and the R1 reasoning model are built, reportedly cost only about $5.6 million to train. That figure shocked the global tech sector when R1 drew worldwide attention in January 2025, the same month the U.S. unveiled its $500 billion Stargate initiative. Chinese tech giants like Baidu and Alibaba have since accelerated their own open-source AI strategies, amplifying China’s momentum in the global AI race.

Microsoft CEO Satya Nadella also highlighted DeepSeek’s influence during a recent internal town hall. He cited DeepSeek’s R1 as a benchmark for efficiency and innovation, framing it as a catalyst for Microsoft’s AI-first strategy. Nadella connected this to Microsoft’s $80 billion AI investment, aiming to infuse next-gen AI features across its entire software ecosystem.

Why DeepSeek V3-0324 Stands Out

1. Blazing Speed on Consumer Hardware

- Unlike most advanced AI models that require expensive data center GPUs, DeepSeek V3-0324 has been reported running at about 20 tokens per second on Apple’s Mac Studio with an M3 Ultra chip and 512GB of memory, a high-end but still consumer-available machine.

- A 4-bit compressed version reduces its size to 352GB, making it feasible for tech-savvy users to run locally.

- This efficiency means businesses and developers can avoid costly cloud fees while still accessing top-tier AI performance.
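As a rough check on the 352GB figure, the storage needed for a model’s weights is simply parameter count × bits per weight ÷ 8 bytes. The sketch below assumes the 685-billion-parameter total quoted in the architecture section and counts weights only, ignoring quantization metadata, activations, and the KV cache; `weight_footprint_gb` is a hypothetical helper, not part of any tool.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: float) -> float:
    """Raw storage for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Assumption: total parameter count as quoted for DeepSeek V3-0324.
TOTAL_PARAMS = 685e9

for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    size = weight_footprint_gb(TOTAL_PARAMS, bits)
    print(f"{label:>6}: {size:,.1f} GB")
```

At 4 bits this gives about 342.5 GB, close to the reported 352GB; the remaining gap is consistent with the extra scale values that quantized formats store alongside the weights, which this sketch leaves out. It also shows why only a machine with 512GB of unified memory can hold the model, while a 16-bit copy would need well over a terabyte.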

2. A Fraction of the Cost, Comparable Power

- DeepSeek reportedly trained the original V3 model, which V3-0324 updates, for about $5.6 million (the cost of the final training run), roughly a tenth of what Meta spent training its similarly capable Llama models.

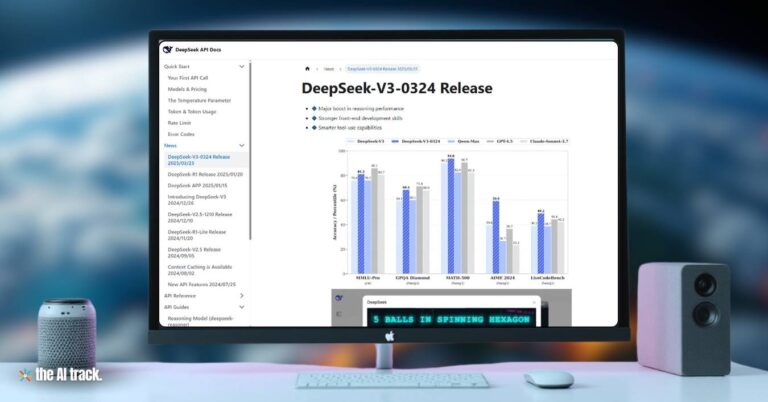

- Despite the lower budget, V3-0324 reportedly matches or exceeds Claude 3.7 Sonnet on coding benchmarks and competes with OpenAI’s o3-mini on reasoning benchmarks.

- This cost efficiency could democratize AI development, allowing startups and researchers to innovate without billion-dollar budgets.

- During Apple’s Jan. 30 earnings call, Apple CEO Tim Cook publicly praised DeepSeek’s innovation, calling its models “excellent” and voicing support for AI that advances performance while cutting costs.
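The $5.6 million figure is narrower than it sounds. DeepSeek’s V3 technical report derives it from 2.788 million H800 GPU-hours at an assumed rental price of $2 per GPU-hour, and it covers only the official training run, excluding prior research and ablation experiments. The arithmetic, using the report’s per-stage breakdown (the dictionary and variable names are illustrative):

```python
# H800 GPU-hours per training stage, as listed in DeepSeek's V3 technical report.
STAGE_GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
PRICE_PER_GPU_HOUR = 2.0  # USD; the rental price the report assumes

for stage, hours in STAGE_GPU_HOURS.items():
    print(f"{stage:>17}: {hours:>9,} GPU-h  ${hours * PRICE_PER_GPU_HOUR / 1e6:.3f}M")

total_hours = sum(STAGE_GPU_HOURS.values())
training_cost = total_hours * PRICE_PER_GPU_HOUR
print(f"{'total':>17}: {total_hours:>9,} GPU-h  ${training_cost / 1e6:.3f}M")
```

The breakdown also shows that post-training accounted for under 0.2% of V3’s GPU-hours; nearly all of the compute went into pre-training.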

3. Cutting-Edge AI Architecture

- Mixture of Experts (MoE) Design – Instead of using all 685 billion parameters for every token (as dense models do), DeepSeek V3-0324 routes each token to a small set of specialized “expert” subnetworks, activating only about 37 billion parameters per token and drastically improving efficiency.

- 128K-Token Context Window – Can take in roughly 131,000 tokens at once, so long documents (like research papers or legal contracts) can be processed without losing track of context.

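- Multi-Token Prediction – Trains the model to predict more than one upcoming token; at inference the extra prediction module can draft the next token in advance, which DeepSeek reports speeds up generation by about 1.8x (roughly 80% faster) compared with producing one token at a time.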

- Multi-Head Latent Attention – Compresses the attention keys and values into a smaller latent representation, shrinking the memory the model needs while generating, especially on long inputs.
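
To make the Mixture of Experts idea concrete, here is a toy sketch of top-k expert routing in plain Python. DeepSeek’s V3 report describes 256 routed experts plus one shared expert per MoE layer, with 8 routed experts active per token; this sketch is only loosely modeled on its gating (sigmoid scores, top-k selection, weights renormalized over the chosen experts) and omits the shared expert, load balancing, and everything else a real implementation needs. The expert functions, sizes, and names such as `moe_forward` are hypothetical.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def route_token(router_logits: list[float], k: int) -> dict[int, float]:
    """Select the k highest-scoring experts and return {expert_index: gate_weight}."""
    scores = [sigmoid(z) for z in router_logits]
    top_k = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    total = sum(scores[i] for i in top_k)
    # Renormalize so the selected experts' weights sum to 1.
    return {i: scores[i] / total for i in top_k}


def moe_forward(x, experts, router_weights, k):
    """Combine the outputs of only the k routed experts for one token vector x."""
    # Router logit for expert i: dot product of x with that expert's routing vector.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    gates = route_token(logits, k)
    out = [0.0] * len(x)
    for i, g in gates.items():  # unselected experts are never evaluated
        y = experts[i](x)
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, gates


# Toy setup (hypothetical): 8 experts, each just scales its input by a different factor.
random.seed(0)
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2
experts = [lambda v, s=i + 1: [s * t for t in v] for i in range(NUM_EXPERTS)]
router_weights = [[random.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

token = [0.5, -1.0, 0.25, 2.0]
output, gates = moe_forward(token, experts, router_weights, TOP_K)
print("experts used:", sorted(gates), "gate weights sum:", round(sum(gates.values()), 6))
print(f"share of V3-0324 parameters active per token: {37e9 / 685e9:.1%}")
```

The saving comes from the loop in `moe_forward`: only the selected experts do any work, so compute per token tracks the active parameters (about 5% of the total here) rather than the full model size.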

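The speed-up from multi-token prediction comes from speculative decoding. DeepSeek’s V3 report says the module’s drafted second token is accepted 85% to 90% of the time, yielding about 1.8x tokens per second. The toy simulation below, which assumes one drafted token per step and ignores the cost of running the draft module, shows why the ideal gain is about 1 + acceptance rate tokens per forward pass; `tokens_per_step` is a hypothetical helper.

```python
import random


def tokens_per_step(num_tokens: int, acceptance_rate: float, seed: int = 0) -> float:
    """Toy model of speculative decoding with one extra drafted token per step.

    Each step the main model emits one verified token; the drafted token for the
    following position is kept with probability `acceptance_rate`.
    """
    rng = random.Random(seed)
    produced = steps = 0
    while produced < num_tokens:
        steps += 1
        produced += 1  # token from the main model
        if produced < num_tokens and rng.random() < acceptance_rate:
            produced += 1  # drafted token accepted
    return produced / steps


for p in (0.85, 0.90):
    print(f"acceptance {p:.0%}: ~{tokens_per_step(200_000, p):.2f} tokens per forward pass")
```

The simulated 1.85 to 1.90 tokens per pass is an upper bound; the reported 1.8x is slightly lower, presumably because drafting and verification add some overhead of their own.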
4. Open-Source Advantage

- Unlike OpenAI, Google, or Anthropic, which keep their best models locked behind paywalls, DeepSeek released the V3-0324 weights under the MIT License, meaning anyone can use them for free, even in commercial products.

- This approach has already inspired other Chinese tech giants (Baidu, Tencent, Alibaba) to release their own open models, accelerating AI innovation.

- Some experts compare this to Android’s rise, when open-source software came to dominate global smartphone market share over closed ecosystems like Apple’s iOS.

What This Means for the Future of AI

✅ A Challenge to U.S. AI Dominance

- Just a year ago, many experts believed China was 1-2 years behind the U.S. in AI. Some now estimate that the gap has shrunk to just 3 months.

- If DeepSeek’s upcoming reasoning model (R2, expected April 2025) outperforms GPT-5, it could mark a major shift in AI leadership.

- The R1 model’s vulnerabilities, exposed by cybersecurity firm KELA, sparked national security concerns in the U.S. due to its potential to generate malicious content. This escalated scrutiny of low-cost open models in military and cybersecurity circles.

✅ A New Era of Local AI

- Since DeepSeek V3-0324 can run on consumer hardware, it reduces reliance on expensive cloud services—potentially changing how businesses deploy AI.

- Developers can now fine-tune and customize powerful AI without needing Nvidia’s most advanced GPUs.

- The shift to reasoning-based models like DeepSeek’s is influencing global AI investment trends, with major players like Amazon, Meta, and Microsoft reallocating budgets from training infrastructure toward inference. In 2025 alone, they’re projected to spend $371 billion, a figure expected to grow to $525 billion annually by 2032.

✅ Big Questions for OpenAI & Co.

- If open models keep improving, will companies like OpenAI need to lower prices or open up their technology to stay competitive?

- Could this push the U.S. to embrace more open AI development to keep up with China’s rapid progress?

Final Thoughts

DeepSeek V3-0324 isn’t just another AI model—it’s a game-changer in cost, accessibility, and performance. By proving that high-efficiency, open-source AI can rival the best proprietary systems, it forces a rethink of how AI should be developed and shared.

For developers, this means more freedom to innovate. For businesses, it means cheaper, faster AI tools. And for the tech world, it signals a new phase in the global AI race—one where openness and efficiency might just beat deep pockets and secrecy.

DeepSeek’s rise has also triggered global reactions beyond the tech sector. On LinkedIn and Reddit, discussions are surging in tech policy, cybersecurity, and developer communities. Meanwhile, China’s advances in AI training (possibly tied to Baidu) continue to fuel debate across platforms like X, where #ChinaAI amassed over 7,000 mentions, and LinkedIn saw 5,000+ engagements on related reports. DeepSeek’s model releases even contributed to share-price swings at companies like Nvidia, Apple, and Meta, highlighting their economic ripple effects. The world is watching, and recalibrating.
