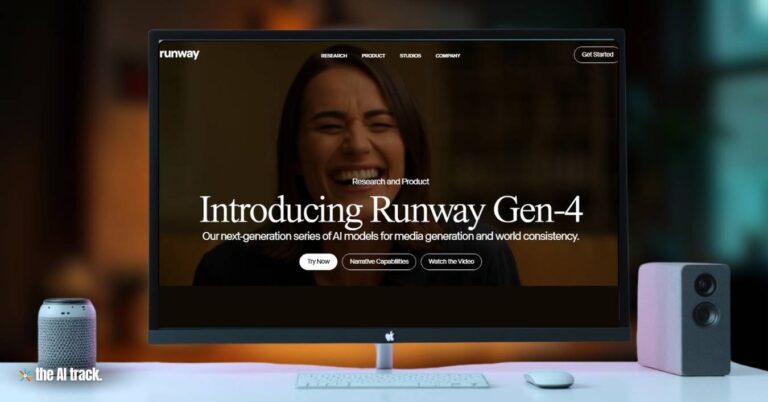

Runway has launched Gen-4, a next-generation AI model that produces high-fidelity videos while keeping characters, locations, and objects consistent across scenes. The company positions the model as a major step toward controllable, production-ready AI video generation, with stronger narrative fidelity and a better grasp of real-world physics.

Runway Released Gen-4 – Key Points

Introduction of Gen-4:

On March 31, 2025, AI startup Runway released Gen-4, a new family of video-generating AI models. Designed to maintain consistency across frames and regenerate scene elements from various angles, Gen-4 targets both individual creators and enterprises.

Enhanced Capabilities:

Gen-4 allows users to define a “look and feel” using reference images and textual instructions, generating media that preserves style, mood, lighting, and cinematic tone without additional training or fine-tuning. World coherence and visual continuity are central to its function.

Technological Advancements:

The model supports consistent characters and objects across vastly different environments. A single reference image can render characters across varied lighting, poses, and treatments. Users can also create object-consistent outputs, ideal for both storytelling and product visuals.

Scene Control and Perspective:

With Gen-4, users can generate different camera angles and scene coverage from a single prompt or image set. This enables the kind of dynamic visual storytelling that previously required manual shooting or VFX-heavy pipelines.

Physics and World Understanding:

Runway says Gen-4 can simulate real-world physics, producing realistic motion and interactions between elements in a scene. The company presents this as a milestone for visual generative models and a step toward “universal generative models” that understand real-world logic and movement.

GVFX Integration (Generative VFX):

Gen-4’s outputs can seamlessly integrate with live-action, animation, and traditional VFX footage. Its fast and flexible generative workflow supports industry-grade compositing and hybrid storytelling.

Narrative Testing with Short Films:

Runway showcased Gen-4’s storytelling power via a curated collection of short films and music videos created entirely using the model, including titles like Lonely, Herd, Retrieval, NY, and Vede.

Industry Collaborations and Funding:

Backed by investors including Salesforce, Google, and Nvidia, Runway continues to position itself as a leader in generative video AI. It has signed production deals with Hollywood studios and committed millions of dollars through its “Hundred Film Fund” to back up to 100 films made with its AI video models.

Legal Considerations:

Like other generative AI companies, Runway faces legal pressure from visual artists over model training practices. It invokes the fair use doctrine in its defense, though the outcomes of pending lawsuits remain uncertain.

Why This Matters:

Gen-4 raises the bar for AI video tools, offering filmmakers, advertisers, and digital creators granular control over visuals, narrative continuity, and realism. With the potential to reshape creative pipelines, reduce production costs, and redefine industry roles, Gen-4 underscores both the growing influence of AI in mainstream content creation and the legal tension surrounding it.
