The Biden administration’s AI National Security Memorandum (NSM) is the most comprehensive U.S. policy to date on AI as it relates to national security. It aims to maintain U.S. leadership in AI, prioritize responsible governance, and foster collaboration with industry, academia, and allies. Recognizing AI as transformative, the NSM cautions that without careful management, AI could weaken U.S. security, human rights, and democratic values worldwide.

Biden AI National Security Memorandum Explained

Emphasis on Frontier AI Models:

In contrast to prior strategies, the AI National Security Memorandum (NSM) emphasizes “frontier AI models,” highly versatile systems such as OpenAI’s GPT-4 and Google’s Gemini. Because of their broad range of applications, these models carry significant national security implications. By focusing on developing and regulating these systems, the NSM seeks to secure a strategic U.S. advantage.

Historical Comparisons and Shifts:

Drawing comparisons to groundbreaking nuclear and space technologies, the NSM frames AI’s potential impact in terms reminiscent of the Cold War era. Although it does not advocate an arms race, the NSM treats AI as transformative enough to warrant a national strategy comparable to earlier strategic documents such as NSC-68.

Collaboration Imperative:

Treating collaboration as key to AI leadership, the U.S. seeks to deepen partnerships with the private sector, including industry leaders such as Google and Microsoft, with academic institutions, and with international partners such as the UAE. These partnerships aim to attract talent, strengthen capabilities, and anchor AI advances in democratic values.

Infrastructure Expansion:

The NSM stresses the need to expand AI infrastructure rapidly, citing power requirements of “hundreds of gigawatts” to support computational growth. Assistant to the President for National Security Affairs (APNSA) Jake Sullivan warned that without such development, U.S. AI leadership could be jeopardized. The NSM encourages federal agencies to streamline permitting and construction for AI-related infrastructure, including the clean energy needed to meet this demand.

Counterintelligence and Security Risks:

To safeguard AI from adversaries, the NSM directs heightened counterintelligence efforts within the AI sector, noting risks such as intellectual property theft and cyberattacks, of which the 2022 Nvidia breach is one example. These measures aim to protect U.S. technological leadership.

Three Core National Security Objectives:

- Safe AI Development Leadership: The U.S. aims to be a leader in safe, secure AI development, emphasizing partnerships and positioning the country as a top destination for global talent and infrastructure investment.

- Effective National Security Use: Adapting AI to meet security needs requires technical and policy changes, and its use must be balanced against privacy, human rights, and democratic values.

- International AI Governance: The NSM promotes international AI governance in collaboration with allies to establish global standards, mitigating misuse and upholding democratic principles.

AI Safety and Testing:

The AI National Security Memorandum instructs the U.S. AI Safety Institute (AISI) to oversee voluntary testing of frontier AI models before their release, focusing on capabilities that could pose national security threats, such as those related to cyberattacks or bioweapon development. The AISI will work with private developers to mitigate risks before public deployment.

Global Talent Recruitment:

Recognizing AI talent as a security asset, the NSM pushes for immigration policies that expedite visas for AI experts. An assessment of both domestic and international AI talent markets by the Council of Economic Advisers will further guide recruitment efforts.

International Governance Collaboration:

The NSM positions the U.S. to lead in setting global AI norms, working with bodies such as the G7 and promoting an adaptable governance framework. This approach contrasts with the EU’s more regulatory stance: the U.S. prioritizes innovation and security alongside democratic values. Collaboration with allies remains central, particularly in building global consensus on AI.

National AI Research Resource (NAIRR):

Expanding the NAIRR is a priority, to ensure that under-resourced institutions have the computational resources needed for AI research. This broadens access beyond well-funded industry players and fosters a more competitive research environment.

Private Sector Involvement Incentives:

To bolster national security, the NSM recommends revising Department of Defense acquisition policies to make it easier for companies new to government work to collaborate on defense projects. Streamlining these processes and encouraging private-sector participation are seen as key to accelerating AI advances in defense.

AI and Human Rights:

The NSM addresses potential AI risks to human rights, directing agencies to monitor and mitigate issues such as privacy invasion and bias. Civil rights organizations have voiced concerns about the use of AI in national security, warning in particular about biased systems. The administration’s response emphasizes transparency and adherence to democratic values in AI deployment.

Why This Matters:

The AI National Security Memorandum marks a pivotal step in AI strategy, positioning the U.S. to lead in AI while protecting national security and setting global standards. With its focus on partnerships, infrastructure, talent, and governance, the NSM offers a comprehensive framework for addressing both the opportunities and risks of AI, guiding responsible use and ethical practices on an international scale.

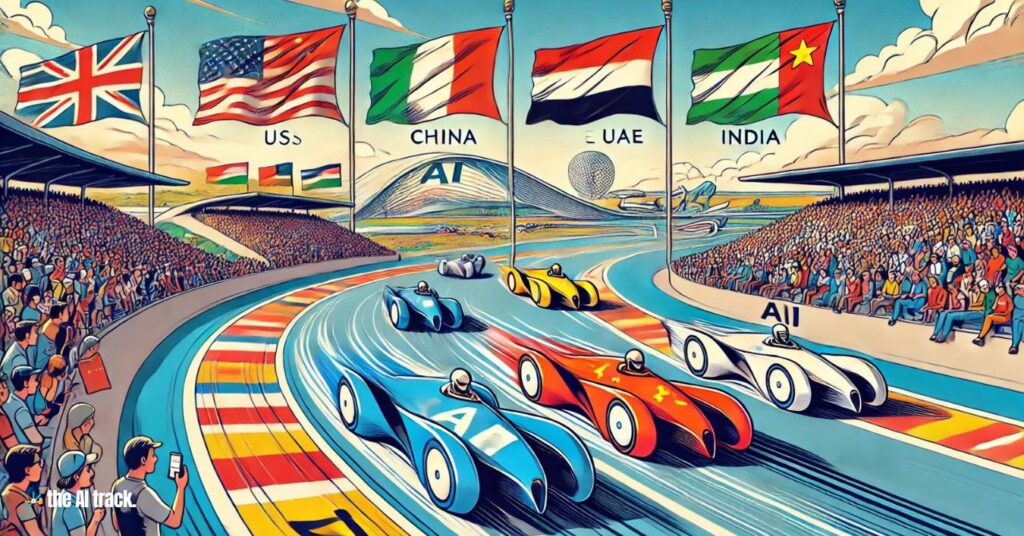

The Quest for AI Supremacy: Strategies for Global Leadership

The pursuit of AI supremacy is underway. The strategies nations are employing to lead in AI, from talent acquisition to ethical frameworks, and their implications.

Read a comprehensive monthly roundup of the latest AI news!