Google I/O 2024 emphasized the integration of AI across Google’s services, highlighting advancements in Gemini and introducing new technologies such as Project Astra for AR glasses.

Google I/O 2024 – Key Points

- Gemini 1.5 Flash and Pro: Google I/O 2024 introduced new Gemini models that improve speed, efficiency, and capabilities, including a large context window for handling long documents.
  - Gemini 1.5 Pro: Google says this cloud-based model has the world’s longest context window, allowing it to handle multiple large documents, summarize long email threads, and soon analyze video content and large codebases. It is now available to all developers globally and will be integrated into Workspace apps like Docs, Sheets, Slides, Drive, and Gmail, functioning as a general-purpose assistant that can fetch and use information across these apps.
  - Gemini 1.5 Flash: Optimized for narrow, high-frequency, low-latency tasks, it offers faster responses for work such as translation, reasoning, and coding.
  - Gemini Live: A voice-only assistant that supports natural back-and-forth conversation with the AI.
  - Gemini Advanced: Users can upload files from Google Drive or their devices for detailed analysis, including insights and custom visualizations generated from data files like spreadsheets.
  - Gemini Nano: Now called Gemini Nano with Multimodality, it can turn inputs such as text, photos, audio, web content, or live video into outputs such as summaries and answers to questions.

- LearnLM Introduction: Google introduced LearnLM, a new family of models fine-tuned for learning, based on Gemini. LearnLM is designed to enhance educational experiences by integrating learning science principles and providing personalized, engaging, and useful learning support.

- Project Astra: Google I/O 2024 introduced a multimodal AI assistant that integrates with AR glasses to offer real-time, contextual interactions with minimal response lag. Demis Hassabis, head of Google DeepMind, has long envisioned a universal AI assistant, and Project Astra aims to fulfill that vision with real-time, multimodal assistance akin to the Star Trek Communicator or the voice from Her. In the Google I/O demo, Astra took input from a phone camera, identifying objects, locating missing items, describing the surroundings, and reviewing code in a conversational manner. Hassabis noted that Astra represents a significant advancement in AI agent technology, building on Google’s history with agents like AlphaGo; such agents range from simple task-oriented tools to sophisticated collaborators.

- AI Integration in Google Search: AI Overviews, formerly known as ‘Search Generative Experience,’ will be available to all U.S. users, placing answers summarized from multiple web sources directly in search results. Google’s new AI-organized search delivers more readable results and better responses to longer queries. For complex queries, it generates answers automatically, reducing the need for multiple searches.

- LearnLM Integration into Google Products: LearnLM enhances learning experiences in products like Google Search, YouTube, and Gemini. Users can adjust AI Overviews in Search for simplified language or detailed breakdowns, ask clarifying questions while watching educational videos on YouTube, and use custom Gems in Gemini for personalized learning guidance.

- Advancements in Gmail: Gemini will change how users manage email through features like email summarization, enhanced reply suggestions, and in-depth search within email content. Starting today, Gemini will be integrated into Workspace apps like Gmail, Google Drive, Docs, Sheets, and Slides, where it can help answer questions, craft emails, and summarize documents.

- AI Capability in the Pixel Camera App: Google I/O 2024 showcased a new AI capability in the Pixel camera app that recognizes objects in real time in response to voice prompts and describes what is in the viewfinder.

- Cross-Platform Bluetooth Tracker Protections: In a collaboration with Apple, Google I/O 2024 introduced cross-platform protections against unwanted Bluetooth tracking. Users will receive alerts if an unknown tracker is detected, enhancing personal security across both Android and iOS devices.

- Veo and Imagen 3: Google announced new generative models for media creation: Veo for video and Imagen 3 for images. Veo can generate 1080p video from text, image, and video prompts, and is being offered to creators for use in YouTube videos and Hollywood films. SynthID will embed watermarks in AI-generated videos created with Veo, helping to verify content authenticity and detect misinformation and deepfakes. Google also introduced VideoFX, a generative video tool that creates 1080p videos from text prompts, and DJ Mode in MusicFX, which lets musicians generate song loops and samples.

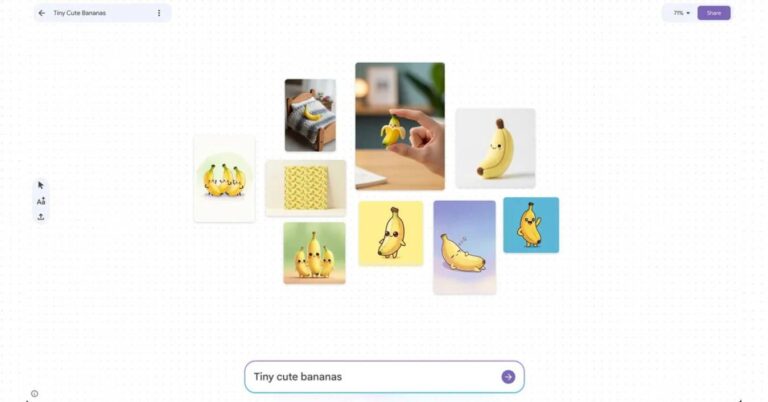

- Google Photos Upgrades: The new ‘Ask Photos’ feature lets users search their Google Photos library with natural language queries. Gemini searches through the photos and responds to specific questions, returning details such as a license plate number directly from the images.

- TalkBack Features: Google is enhancing TalkBack, Android’s screen reader, with Gemini Nano to provide richer, clearer image descriptions for blind and low-vision users. Separately, Gemini Nano on Android phones will help detect scam calls by identifying common scammer conversation patterns and issuing real-time warnings.

- Music AI Sandbox: A new tool for musicians, the Music AI Sandbox allows the creation of music loops from AI prompts, reflecting Google’s ongoing innovation in creative AI fields.

- Project Gameface on Android: Originally a hands-free gaming mouse, Project Gameface has been extended to Android, enabling users to control devices through facial gestures and head movements, with open-source code available for developers.

- Tensor-Powered AI Features in Pixel 8a: Google’s recently announced Pixel 8a offers Tensor-powered AI capabilities in a sub-$500 device, along with enhanced software and security support. Separately, Gemini Nano will be integrated into Chrome on desktop, helping users generate text for social media posts, product reviews, and more.

- Future Developments: Google is also exploring future applications for Astra, such as a trip planning tool that uses Gemini to create and edit vacation itineraries, reflecting ongoing research into multimodal models and new form factors for AI assistants. Google hinted at future versions of the Gemini model, potentially leading to a new AI-driven Google Assistant called Pixie, which may debut on upcoming Pixel devices. Gemini Advanced subscribers can also create customized chatbots, or ‘Gems,’ tailored to specific needs like coaching or creative guidance.

- Collaborative Efforts for Responsible AI in Education: Google is collaborating with institutions like MIT, Columbia Teachers College, and Khan Academy to develop and test educational benchmarks for AI. This partnership aims to maximize AI’s benefits in education while addressing potential risks.

- Wear OS and AI-Powered Features: Google also discussed upcoming features for Wear OS, its operating system for smartwatches. Expected enhancements include AI-powered capabilities, reflecting the broader integration of AI across Google’s product ecosystem.